Anyone who has worked on the production side of virtual environments can attest that content creation and scene development are costly and time-consuming endeavors.

Depending on the complexity and fidelity of the scene, production can easily become the highest expense of the project. In more traditional virtual environments such as Unreal 3, CryEngine, Big World, Arma3, and Delta3D, highly skilled graphic artists are required to create the static meshes using Maya, 3DSMax, or Blender.

Textures must also be created to meet the resolution and quality standards of the target platform. The process is much like a movie production and requires exhaustive planning.

The Second Life and OpenSimulator platforms are different. Content creation has been democratized to allow anyone with the will to learn to contribute to a scene. Labor costs may be lower, but production time can still be long. Further, high-quality content still has to come from experienced creators.

A labor-saving approach

The US Army Research Laboratory’s Simulation and Training Technology Center has been experimenting with automation to reduce the time it takes to create content and ingest it into a virtual environment. The objective of this line of research is to reduce the time and labor (in other words, money) it takes to produce a usable virtual recreation of a real-world environment, called an operational environment.

“Usable” is defined as being as interactive as a traditionally produced static mesh object or primitive-based build. For example, a house imported into the scene using the automated process would still need to have working doors and interior spaces.

The Technology Center recently funded the University of Central Florida’s Institute for Simulation and Training to investigate the use of ground-based LIDAR (Laser Imaging, Detection and Ranging) to rapidly scan an operational environment and produce a scene for a virtual environment.

The workflow that has resulted from this investigation has produced valuable information we have used to recreate areas of the University of Central Florida campus.

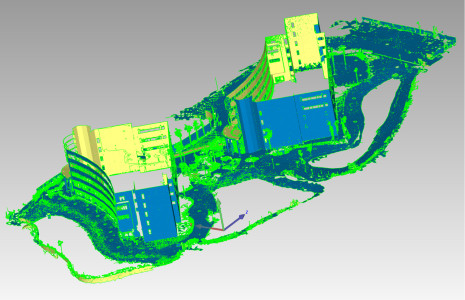

The first step in the workflow is to scan the operational environment. The university chose to use the FARO scanning system mounted on a tripod to scan an area located in the Orlando Research Park. Multiple scans were performed and later stitched together in software. The resulting point cloud can be seen in the image below. The FARO system also includes the ability to capture digital photos so that textures can be created later.
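Stitching scans amounts to expressing every scan in one shared coordinate frame. The following is a minimal sketch of that idea, not the software used in the project. It assumes each scan's pose (a rotation about the vertical axis plus the tripod position) is already known, whereas real registration software has to estimate those poses. The names `to_world` and `stitch` are hypothetical.

```python
import math


def to_world(points, yaw_deg, offset):
    """Rotate scanner-local points about the vertical (z) axis, then translate.

    Assumption: the tripod is level, so a single yaw angle describes its heading.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    ox, oy, oz = offset
    return [(c * x - s * y + ox, s * x + c * y + oy, z + oz) for x, y, z in points]


def stitch(scans):
    """Merge scans given as (points, yaw_deg, offset) into one point cloud."""
    merged = []
    for points, yaw_deg, offset in scans:
        merged.extend(to_world(points, yaw_deg, offset))
    return merged


# Two hypothetical tripod positions facing each other, 10 m apart,
# each seeing the same building corner 5 m in front of the scanner.
scan_a = ([(5.0, 0.0, 2.0)], 0.0, (0.0, 0.0, 0.0))
scan_b = ([(5.0, 0.0, 2.0)], 180.0, (10.0, 0.0, 0.0))
cloud = stitch([scan_a, scan_b])
```

After the transform, both scans place the shared corner at nearly the same world position, which is what lets overlapping scans line up into a single cloud.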

The next step in the workflow process is to convert the point cloud data into a mesh. This is a heavy task for a computer and takes many hours of processing on a modern desktop PC. The output of the conversion software is shown below.

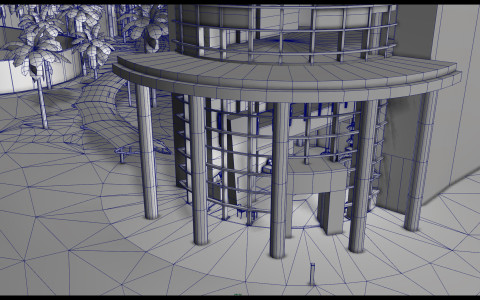

Even though this is now a mesh model, the polygon count is in the millions and much too high to be usable in a virtual environment.

The third step in the workflow is to decompose this high density model into a lower polygon count mesh model using Maya.
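To illustrate what polygon reduction does, here is a minimal sketch of vertex clustering, one of the simplest decimation techniques. It is not the method Maya uses, and the function name `cluster_decimate` and the `cell` parameter are assumptions made for this example. Vertices that fall in the same cubic grid cell are merged into their centroid, and triangles that collapse as a result are dropped.

```python
import math


def cluster_decimate(vertices, triangles, cell):
    """Merge all vertices inside each cubic grid cell of size `cell`."""
    cell_of = {}  # grid cell -> index of the merged vertex
    sums = []     # per merged vertex: [sum_x, sum_y, sum_z, count]
    remap = []    # old vertex index -> merged vertex index
    for x, y, z in vertices:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        idx = cell_of.setdefault(key, len(sums))
        if idx == len(sums):  # first vertex seen in this cell
            sums.append([0.0, 0.0, 0.0, 0])
        acc = sums[idx]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
        remap.append(idx)

    # Each merged vertex sits at the centroid of the vertices it replaced.
    new_vertices = [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums]

    new_triangles = []
    seen = set()
    for a, b, c in triangles:
        a, b, c = remap[a], remap[b], remap[c]
        if a == b or b == c or a == c:
            continue  # triangle collapsed to an edge or a point
        key = frozenset((a, b, c))
        if key in seen:
            continue  # duplicate of a triangle already kept
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles
```

A larger `cell` removes more polygons at the cost of detail. Production tools generally use more careful error-driven methods, but the trade-off between polygon count and fidelity is the same.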

After the decomposition is complete, the model is saved as either an FBX or a Collada 1.4 file. A rendering of the lower polygon count model is shown below.

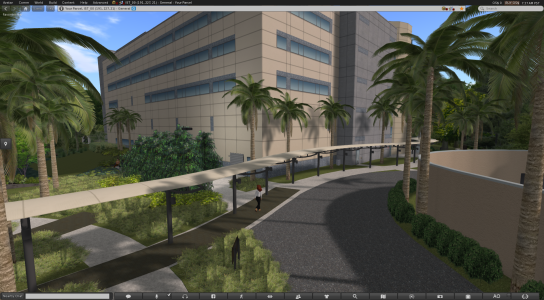

Since the output of this last step of the workflow is a generic mesh model, it can be imported into any modern game or virtual environment engine.

To test this proof of concept, we used the Military Open Simulator Enterprise Strategy grid, also known as MOSES. We imported the Collada mesh file to a standard region and added background props such as foliage.

Basic textures were applied and a simple scene was created, which you can see below. The two multi-story buildings reside within a fenced area and the entire footprint fits on a single standard sim at 1:1 scale.
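Whether a model fits on one region at 1:1 scale can be checked from its vertex coordinates before import. This is a minimal sketch, assuming the vertices are in meters with z pointing up and that a standard region is 256 by 256 meters. The function names are hypothetical and the coordinates below are made up for illustration; they are not measurements from the project.

```python
REGION_SIZE_M = 256.0  # assumed side length of a standard region, in meters


def footprint(vertices):
    """Return the (width, depth) of the model's horizontal bounding box."""
    xs = [v[0] for v in vertices]
    ys = [v[1] for v in vertices]
    return max(xs) - min(xs), max(ys) - min(ys)


def fits_in_region(vertices, scale=1.0, region=REGION_SIZE_M):
    """True if the scaled footprint fits inside one square region."""
    width, depth = footprint(vertices)
    return width * scale <= region and depth * scale <= region


# Hypothetical extreme corners of a fenced compound, in meters.
compound = [(0.0, 0.0, 0.0), (120.0, 200.0, 30.0)]
ok_at_full_scale = fits_in_region(compound)
```

If the check fails, the options are to split the model across adjacent regions or to import it at a reduced scale, which would give up the 1:1 correspondence with the real site.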

This proof of concept is considered a success, and we plan to continue exploring ground-based LIDAR technology through further testing and evaluation.

The uses for this technique go beyond military applications.

This technology could be applied to rapidly create any large space for use in domain-relevant training, such as training for first responders. Instead of generic office buildings and urban landscapes, first responders could be presented with a virtual environment that closely represents their actual areas of responsibility.

Early workflow data indicate that this technique reduces effort by more than 60 percent compared with creating a similar scenario using traditional techniques. It is believed that, with further investigation, the effort it takes to produce a similar scene could be reduced even further.

Dr. Charlie Hughes, Dr. Lori Walters, and Alexi Zelenin at the University of Central Florida’s Synthetic Reality Lab contributed to this project, as did Gwenette Sinclair and Jessica Compton of the Virtual Worlds Research Lab at the US Army Research Laboratory’s Simulation and Training Technology Center.

OpenSim users may download a copy of an OAR export file of the region discussed in this article from our website here: http://militarymetaverse.org/content/

- LIDAR accelerates virtual building - October 11, 2014