The company that released the popular Dall-E 2 text-to-image generator now has a text-to-3D AI that anyone can try.

OpenAI on Tuesday open-sourced Point-E, its newest generative AI system, which creates 3D point clouds from text prompts.

The code is available on GitHub for those who want to try out the new AI.

You can also read a paper on Point-E published last week that gives more details on the system and the methods used to train it.

According to the paper, Point-E is able to produce 3D models in only one or two minutes on a single GPU.

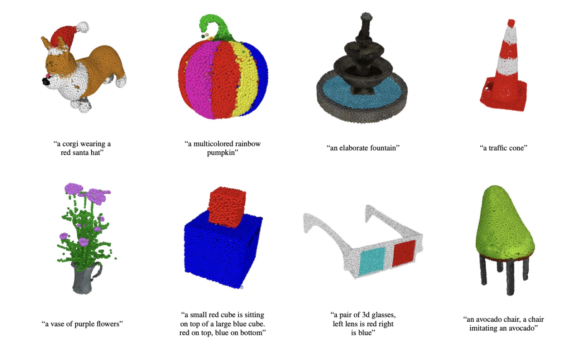

“We find that our system can often produce colored 3D point clouds that match both simple and complex text prompts,” said the paper’s authors. “We refer to our system as Point-E since it generates point clouds efficiently.”

Point-E’s biggest draw is its speed, but the quality of its output still has a long way to go.

“While our method performs worse on this evaluation than state-of-the-art techniques, it produces samples in a small fraction of the time,” they said. “We hope that our approach can serve as a starting point for further work in the field of text-to-3D synthesis.”

Point clouds are sets of data points in space that together represent a 3D shape or object. Point-E builds them in two steps.
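To make the definition concrete, here is a minimal Python sketch of a colored point cloud like the ones the paper describes, where each point stores an (x, y, z) position plus an RGB color. The `ColoredPointCloud` class and the `sample_sphere` helper are hypothetical illustrations, not part of the Point-E codebase, and the sphere is sampled procedurally rather than generated by a model.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class ColoredPointCloud:
    """Hypothetical container: one (x, y, z) position and one (r, g, b) color per point."""
    coords: list[tuple[float, float, float]] = field(default_factory=list)
    colors: list[tuple[float, float, float]] = field(default_factory=list)

    def add(self, xyz, rgb):
        self.coords.append(tuple(xyz))
        self.colors.append(tuple(rgb))

    def __len__(self):
        return len(self.coords)

    def bounding_box(self):
        """Return the (min corner, max corner) of the axis-aligned box enclosing all points."""
        xs, ys, zs = zip(*self.coords)
        return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def sample_sphere(n: int, radius: float = 1.0, seed: int = 0) -> ColoredPointCloud:
    """Hypothetical helper: sample n points uniformly on a sphere, colored by height."""
    rng = random.Random(seed)
    cloud = ColoredPointCloud()
    for _ in range(n):
        # Normalizing a 3D Gaussian vector gives a uniformly random direction.
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v)) or 1.0
        xyz = [radius * c / norm for c in v]
        # Map z from [-radius, radius] to t in [0, 1]: red at the bottom, blue at the top.
        t = (xyz[2] / radius + 1.0) / 2.0
        cloud.add(xyz, (1.0 - t, 0.0, t))
    return cloud
```

For example, `sample_sphere(500)` returns 500 points on a unit sphere, shaded from red at the bottom to blue at the top.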

“Our method first generates a single synthetic view using a text-to-image diffusion model, and then produces a 3D point cloud using a second diffusion model which conditions on the generated image,” said the paper’s authors.
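The two-stage design the authors describe can be sketched as a simple chain of functions. In this hypothetical Python sketch, neither stage is a diffusion model: `stage1_text_to_image` derives a tiny deterministic "view" from a hash of the prompt, and `stage2_image_to_points` lifts that view into colored points using a toy depth rule. What the sketch does show is the data flow, where the second stage conditions only on the image produced by the first.

```python
import hashlib
from typing import List, Tuple

Pixel = Tuple[float, float, float]                       # (r, g, b) in [0, 1]
Image = List[List[Pixel]]                                 # rows of pixels
Point = Tuple[float, float, float, float, float, float]   # (x, y, z, r, g, b)


def stage1_text_to_image(prompt: str, size: int = 4) -> Image:
    """Hypothetical stand-in for the text-to-image diffusion model.
    Builds a deterministic size x size 'synthetic view' from a SHA-256 hash of the prompt."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    image = []
    for row in range(size):
        pixels = []
        for col in range(size):
            i = 3 * (row * size + col)
            # Wrap around the 32-byte digest if the image needs more bytes.
            r, g, b = (digest[(i + k) % len(digest)] / 255.0 for k in range(3))
            pixels.append((r, g, b))
        image.append(pixels)
    return image


def stage2_image_to_points(image: Image) -> List[Point]:
    """Hypothetical stand-in for the image-conditioned point-cloud diffusion model.
    Places one colored point per pixel; depth is a toy function of brightness."""
    size = len(image)
    points = []
    for row, pixels in enumerate(image):
        for col, (r, g, b) in enumerate(pixels):
            x = col / (size - 1) - 0.5 if size > 1 else 0.0
            y = 0.5 - row / (size - 1) if size > 1 else 0.0
            z = (r + g + b) / 3.0 - 0.5  # brighter pixels sit closer to the viewer
            points.append((x, y, z, r, g, b))
    return points


def text_to_point_cloud(prompt: str) -> List[Point]:
    """Chain the two stages: the second stage sees only the first stage's image."""
    view = stage1_text_to_image(prompt)
    return stage2_image_to_points(view)
```

According to the paper, each stage in Point-E is a trained diffusion model; replacing these stand-ins with real model calls would change what each stage computes, but not the shape of the pipeline.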

Point-E may seem like a novelty for now, but if it reaches the point where its 3D output matches the quality of 2D images created with Dall-E 2 or Stable Diffusion, it could be the next big thing in the fast-moving world of AI image generators.

- OpenAI’s new Point-E lets you generate 3D models with text - December 21, 2022
